
Quantum Platforms: A Comparison

The three leading platforms (in 2024)

As quantum computing is still in its early stages, numerous platforms are being explored, each competing to become the leading technology in the field. Among the various approaches, three have emerged as front-runners: trapped ions, superconducting qubits, and neutral atoms.

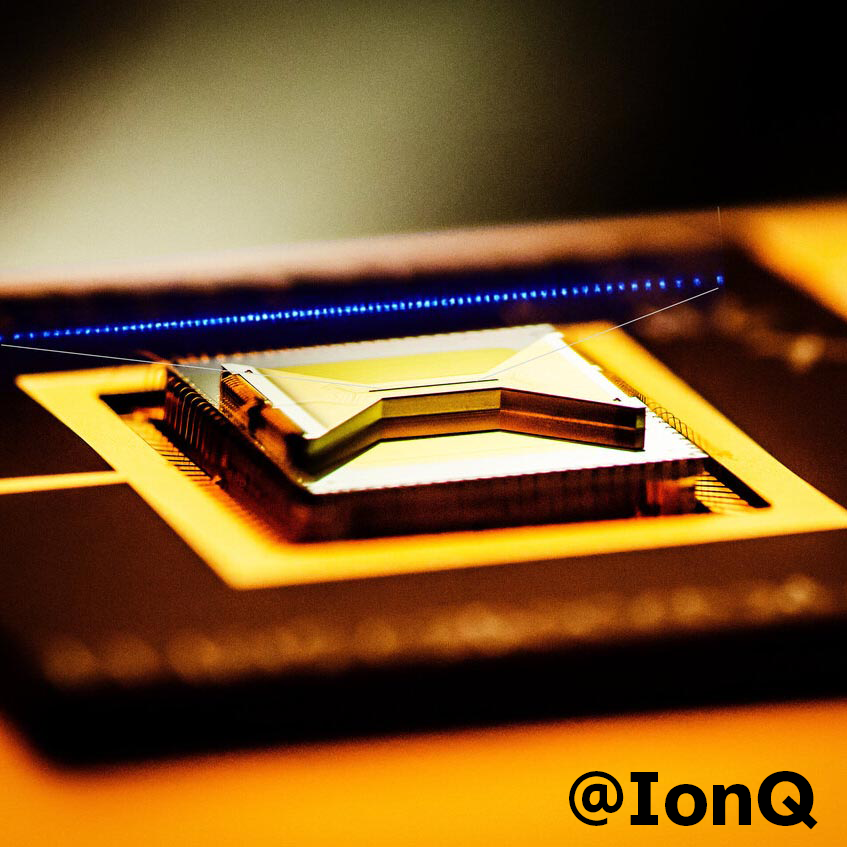

Trapped Ions

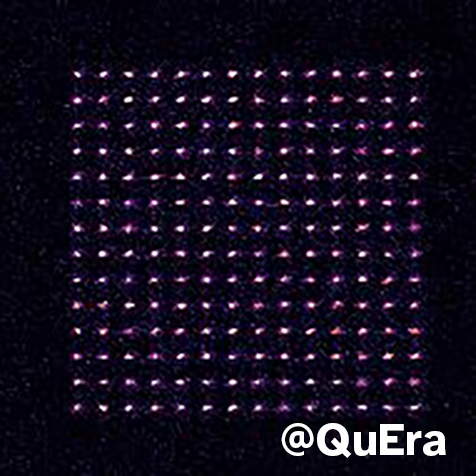

Neutral Atoms

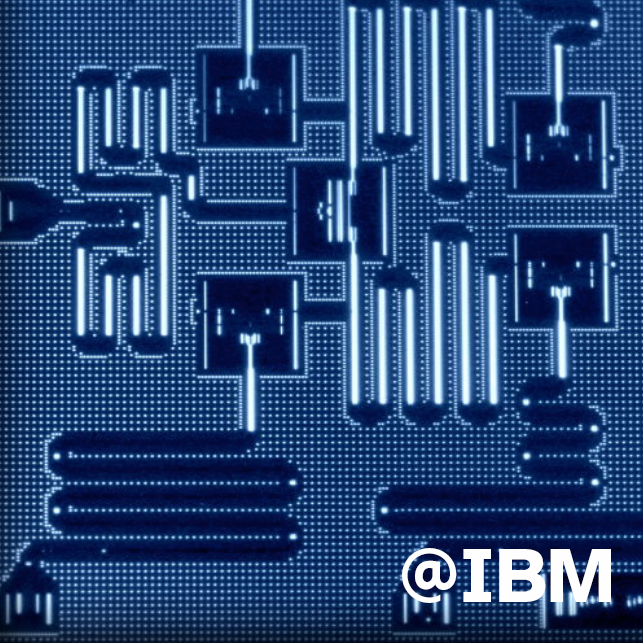

SQUIDs

Each of these technologies offers unique advantages and challenges. Here I want to compare the latest advances in two-qubit gate fidelity across these three platforms.

Vs.

There are countless metrics available to compare different quantum computing platforms, and unsurprisingly, each metric tends to favor a specific platform. Naturally, those working in that field often highlight the metric that puts their platform in the best light. For instance, IBM introduced the concept of quantum volume in 2018, aiming to create a single-number metric that factors in scalability, precision, and connectivity. However, as they began to fall behind in this metric (with the current record held by Quantinuum), it has quietly fallen out of favor and is rarely mentioned in IBM's presentations.

In this discussion, I'd like to provide a clearer picture of where the field stands today. These days, the focus seems to be on scalability, so let’s start there.

Scalability

Of all the platforms, I believe trapped ions struggle the most in this respect. The origin of the problem can be traced back to connectivity: systems with high connectivity have inherently stronger interactions between qubits, and strong interactions become harder to control as the system grows.

NOTE: You might wonder why experiments in the early 2010s had as many qubits as today's experiments, or even more. Those early experiments, however, had only a limited degree of control over their qubits. A fairer comparison would include data only from ~2016 onwards.

A scalability catch

The previous plot looks quite scattered and discontinuous. This is because system size alone is not a fair metric for judging the capabilities of a quantum computer. Some systems are inherently scalable: you could walk into most neutral atom or ion labs and ask the researchers to double the number of atoms or ions in their setups. Within certain limits (such as laser power and the researchers’ willingness to entertain a stranger’s request), this could be done in a matter of minutes. However, the control over these additional qubits would be far from ideal.

Imagine you’re the head of a company, and someone suggests doubling the number of employees to boost production and revenue. Do you think this would immediately have the desired effect? Or would your company face challenges in training and integrating all those new employees, while also incurring unnecessary additional expenses? Similarly, while increasing the qubit count in a quantum computer might be straightforward, the real challenge lies in maintaining precise control over the growing number of qubits.

This is just a reminder that flashy headlines should be approached with a healthy dose of skepticism.

Trapped ions

In trapped-ion systems, connectivity is mediated by the Coulomb repulsion between ions. While this strong interaction enables effective communication between qubits, it also makes precise control more difficult as the number of qubits increases. To address this, researchers have adopted a divide et impera approach, where ions are confined in smaller clusters. High-fidelity operations are performed within these clusters, and by shuttling ions between them, it’s possible to exchange qubits across clusters. As an ion trapper, I won't make the common mistake of telling you why ions are the best platform for quantum computing; however, you can read more about trapped ions in my blog here.

Neutral atoms

Neutral atoms fall between the two extremes of connectivity. Quantum gates are performed by exciting the atoms to a Rydberg state, where they become highly polarizable. In simple terms, you can think of the atom temporarily behaving like an ion (long-range interactions, enhanced connectivity). During this brief period, the interactions between two atoms are significantly enhanced, allowing quantum gates to be performed. As with trapped ions, atoms need to be moved close to each other to carry out a quantum gate. Once the gate is performed and the atoms are de-excited, they can be stored elsewhere (short-range interactions, low connectivity) or shuffled for further operations. This approach allows flexible control over the system's connectivity. For instance, an experimental physicist with 1,000 atoms can choose to perform a quantum gate between atom #10 and atom #657. The process involves selecting those two atoms, moving them to a different location, exciting them to the Rydberg state, performing the gate, and then returning them to their original positions. Though it sounds complex, remarkable experiments have demonstrated this level of precise control (see, for example, QuEra and its related videos).

Superconducting Qubits

Although superconducting qubits have lower connectivity than the other platforms, they have a key advantage: they are engineered systems (often referred to as synthetic atoms). While this can be a disadvantage in some respects, it allows the system’s connectivity to be designed and customized during the development phase. However, once set, it cannot be changed, and additional techniques are required to perform quantum gates between distant qubits. The challenge is that, to entangle qubits that are far apart, gates must be performed on all the qubits linking the two targets. This becomes impractical if the fidelity of two-qubit gates is low, since the error of every intermediate gate accumulates. As a result, this platform relies heavily on Quantum Error Correction and was one of the first to implement it.
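To get a feeling for how quickly this becomes impractical, here is a rough back-of-the-envelope sketch. It is a toy model of my own, not a description of any real device: I assume qubits arranged on a line with nearest-neighbour coupling only, a distant qubit brought next to its partner with SWAPs, each SWAP costing three native two-qubit gates, and independent errors so that fidelities simply multiply. The function name `distant_gate_estimate` and its parameters are hypothetical.

```python
def distant_gate_estimate(distance, f_two_qubit, gates_per_swap=3):
    """Rough fidelity estimate for a two-qubit gate between two qubits that
    sit `distance` sites apart on a nearest-neighbour line.

    Assumptions (illustrative only):
      * one qubit is moved next to the other with SWAPs, then the gate is applied;
      * each SWAP costs `gates_per_swap` native two-qubit gates (3 CNOTs);
      * errors are independent, so fidelities multiply.
    """
    if distance < 1:
        raise ValueError("distance must be at least 1")
    n_swaps = distance - 1                      # neighbours need no SWAP
    n_gates = n_swaps * gates_per_swap + 1      # SWAP overhead + the gate itself
    return n_gates, f_two_qubit ** n_gates


for f in (0.99, 0.999):
    for distance in (1, 5, 10):
        n_gates, total = distant_gate_estimate(distance, f)
        print(f"F = {f}, distance = {distance:2d}: "
              f"{n_gates:2d} gates, total fidelity ~ {total:.3f}")
```

Under these assumptions, a 99% two-qubit gate applied across ten sites needs 28 native gates and ends up at roughly 75% overall, which is why low connectivity and low gate fidelity are such a bad combination.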

Two-qubit gate fidelity

So far I have mentioned the “degree of control” over qubits without really specifying what I mean by it. Just as a classical computer performs logic operations on its bits, a quantum computer must be able to perform logic operations on its qubits. We can sort these logic operations (gates) into classes according to the number of qubits they affect: an operation that targets one qubit is a single-qubit gate, one that targets two qubits is a two-qubit gate, and so on. Needless to say, the more qubits a gate affects, the harder it is to perform successfully.

It can be proven that a working quantum computer needs only single- and two-qubit gates: any N-qubit gate (N > 2) can be decomposed into a sequence of single- and two-qubit operations. Clearly, this comes at a cost, and we pay the price in computing time. While a 10-qubit gate could in principle be executed in a single operation, its decomposition into simpler gates may require significantly more operations.
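A small concrete case makes the cost visible. The sketch below checks numerically that the three-qubit Toffoli (CCNOT) gate equals a sequence of 15 single- and two-qubit gates, 6 of which are CNOTs. The gate sequence is the standard textbook decomposition into H, T, T† and CNOT; the helper names `one_qubit` and `cnot` are my own, and qubit 0 is taken to be the leftmost (most significant) one.

```python
import numpy as np

N_QUBITS = 3
I2 = np.eye(2)
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
T = np.diag([1, np.exp(1j * np.pi / 4)])
Tdg = T.conj().T


def one_qubit(gate, target):
    """Embed a 2x2 gate acting on `target` into the 3-qubit space."""
    ops = [I2] * N_QUBITS
    ops[target] = gate
    full = ops[0]
    for op in ops[1:]:
        full = np.kron(full, op)
    return full


def cnot(control, target):
    """8x8 CNOT built as a permutation of the computational basis states."""
    dim = 2 ** N_QUBITS
    full = np.zeros((dim, dim))
    for basis in range(dim):
        bits = [(basis >> (N_QUBITS - 1 - q)) & 1 for q in range(N_QUBITS)]
        if bits[control]:
            bits[target] ^= 1
        out = sum(bit << (N_QUBITS - 1 - q) for q, bit in enumerate(bits))
        full[out, basis] = 1
    return full


a, b, c = 0, 1, 2  # two controls (a, b) and one target (c)

# Gates in the order they are applied in time.
circuit = [
    one_qubit(H, c),
    cnot(b, c), one_qubit(Tdg, c),
    cnot(a, c), one_qubit(T, c),
    cnot(b, c), one_qubit(Tdg, c),
    cnot(a, c), one_qubit(T, b), one_qubit(T, c),
    one_qubit(H, c),
    cnot(a, b), one_qubit(T, a), one_qubit(Tdg, b),
    cnot(a, b),
]

decomposed = np.eye(2 ** N_QUBITS, dtype=complex)
for gate in circuit:
    decomposed = gate @ decomposed  # later gates multiply from the left

# Ideal Toffoli: swaps |110> and |111>, leaves everything else untouched.
toffoli = np.eye(2 ** N_QUBITS)
toffoli[[6, 7]] = toffoli[[7, 6]]

print("gates used:", len(circuit))
print("matches Toffoli:", np.allclose(decomposed, toffoli))
```

One three-qubit operation has turned into fifteen smaller ones, and each of those carries its own error, which is why the fidelity of the elementary gates matters so much.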

In experimental quantum systems, each quantum gate can deviate slightly from the intended outcome. To assess the quality of a gate, we use a metric called fidelity, which measures how closely the gates implemented in the lab match the ideal ones. We can define the fidelity of a unitary operation as [1]:

$$
\bar{F}(\mathcal{E}, U) = \frac{\sum_j \mathrm{tr}\left(U U_j^\dagger U^\dagger \mathcal{E}(U_j)\right) + d^2}{d^2 (d + 1)} \tag{1}
$$

where $U$ is the ideal unitary operation (quantum gate), $\mathcal{E}$ is the gate implemented in the lab, $d$ is the dimensionality of the system ($d = 2^n$ for $n$ qubits), and $U_j$ are the $d$-dimensional SU(2) representation of the Pauli matrices.

TLDR: Each quantum gate can be described by a $2^n \times 2^n$ unitary matrix, where $n$ is the number of qubits affected. The accuracy of the gate implemented in the lab can be measured by comparing it with the ideal matrix, that is, by calculating its fidelity.
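As a minimal sketch of what "comparing with the ideal matrix" means in practice, consider the special case where the lab gate is itself a unitary $V$ (a purely coherent error, no decoherence). In that case the average gate fidelity reduces to the closed form $F = \left(|\mathrm{tr}(U^\dagger V)|^2 + d\right) / \left(d(d+1)\right)$, with $d$ the dimension of the matrices. The example below applies it to a CZ gate whose conditional phase is slightly off; the function names and the error model are illustrative assumptions of mine, not data from any experiment.

```python
import numpy as np


def average_gate_fidelity(u_ideal, v_actual):
    """Average gate fidelity between two unitaries of the same dimension d.

    Valid only when the implemented gate is unitary (coherent error).
    """
    d = u_ideal.shape[0]
    overlap = np.trace(u_ideal.conj().T @ v_actual)
    return (abs(overlap) ** 2 + d) / (d * (d + 1))


# Ideal two-qubit controlled-Z gate (d = 4).
CZ = np.diag([1, 1, 1, -1]).astype(complex)


def cz_with_phase_error(epsilon):
    """Hypothetical imperfect CZ: the conditional phase is pi + epsilon."""
    return np.diag([1, 1, 1, np.exp(1j * (np.pi + epsilon))])


for eps in (0.0, 0.01, 0.1):
    f = average_gate_fidelity(CZ, cz_with_phase_error(eps))
    print(f"phase error {eps:4.2f} rad -> infidelity 1 - F = {1 - f:.2e}")
```

For this particular error model the infidelity works out to $0.3\,(1 - \cos\epsilon)$, so it grows quadratically with small phase errors: a tenfold improvement in phase control buys a hundredfold improvement in infidelity.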

Despite recent progress in realizing high-fidelity multi-qubit gates, the majority of logic gates implemented in the lab are still single-qubit ($n = 1$) or two-qubit ($n = 2$) gates. As expected, single-qubit gates generally have higher fidelity than two-qubit gates, since fewer qubits are involved. When comparing quantum computing platforms, gate fidelity is a more meaningful metric than simply looking at system size. The plot below shows the evolution of two-qubit gate infidelity ($1 - F$) over the years for superconducting qubits, neutral atoms, and trapped ions.

Note: the lower the value on the y-axis, the better.